Biography

I am a final-year PhD researcher in Natural Language Processing at the University of Edinburgh and the University of Cambridge through the European Lab for Learning and Intelligent Systems (ELLIS) Network, advised by Shay Cohen, Anna Korhonen, and Edoardo Ponti. I am a recipient of the Apple Scholars in AIML PhD Fellowship, recognizing emerging leaders in AI/ML research, and a member of the ELLIS PhD Cohort, a pan-European network for excellence in artificial intelligence.

My research investigates whether large foundation models (LFMs; e.g., LLMs and VLMs) develop latent world models across modalities such as text, vision, and speech. Latent world modelling refers to a model’s intrinsic understanding of environment and agent dynamics, which enables reasoning and planning without an external, specialized world model. Within this theme, my interests span:

-

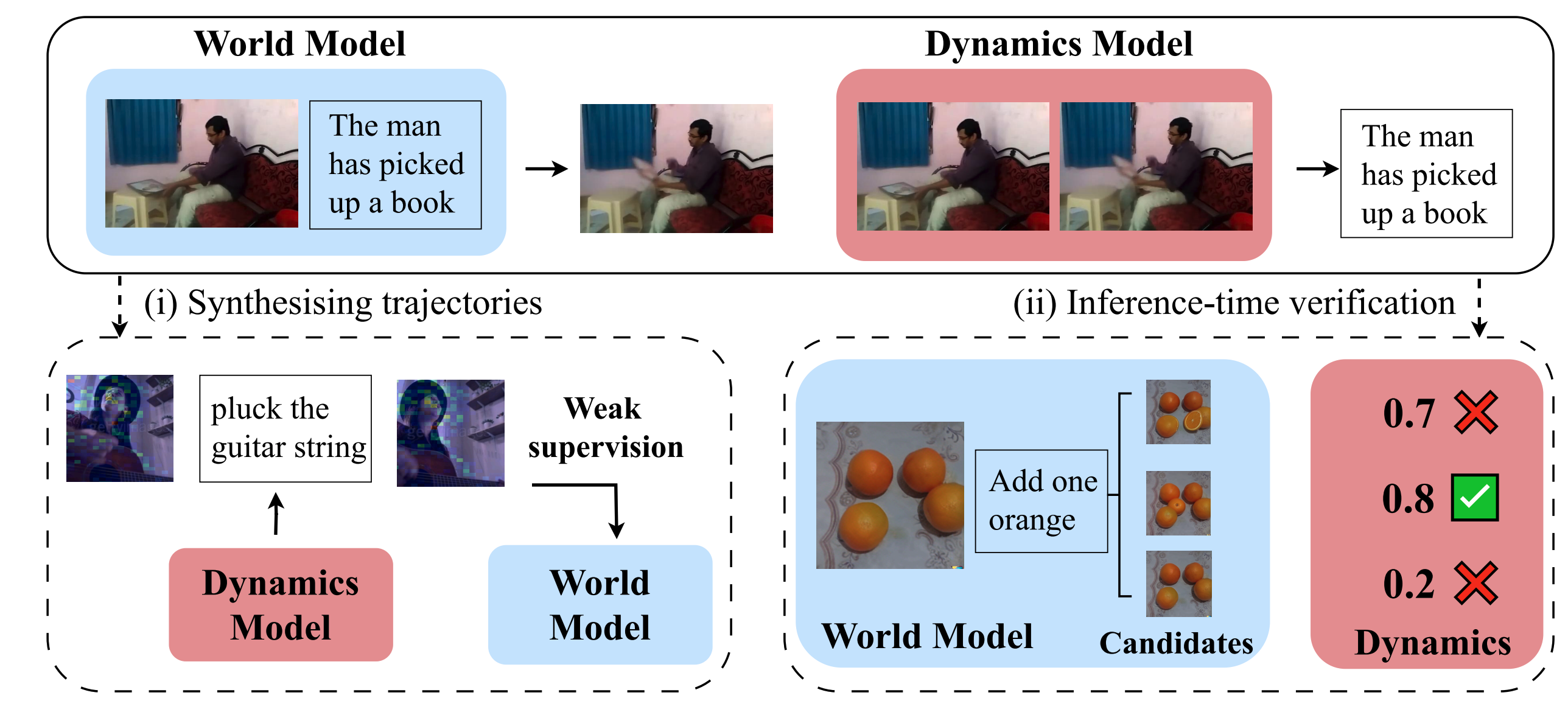

World modelling as an emergent capability — eliciting LFM to simulate future states and causal dynamics, with the novel training paradigm requiring minimum supervision. (🌀 SWIRL, BoostrapWM)

-

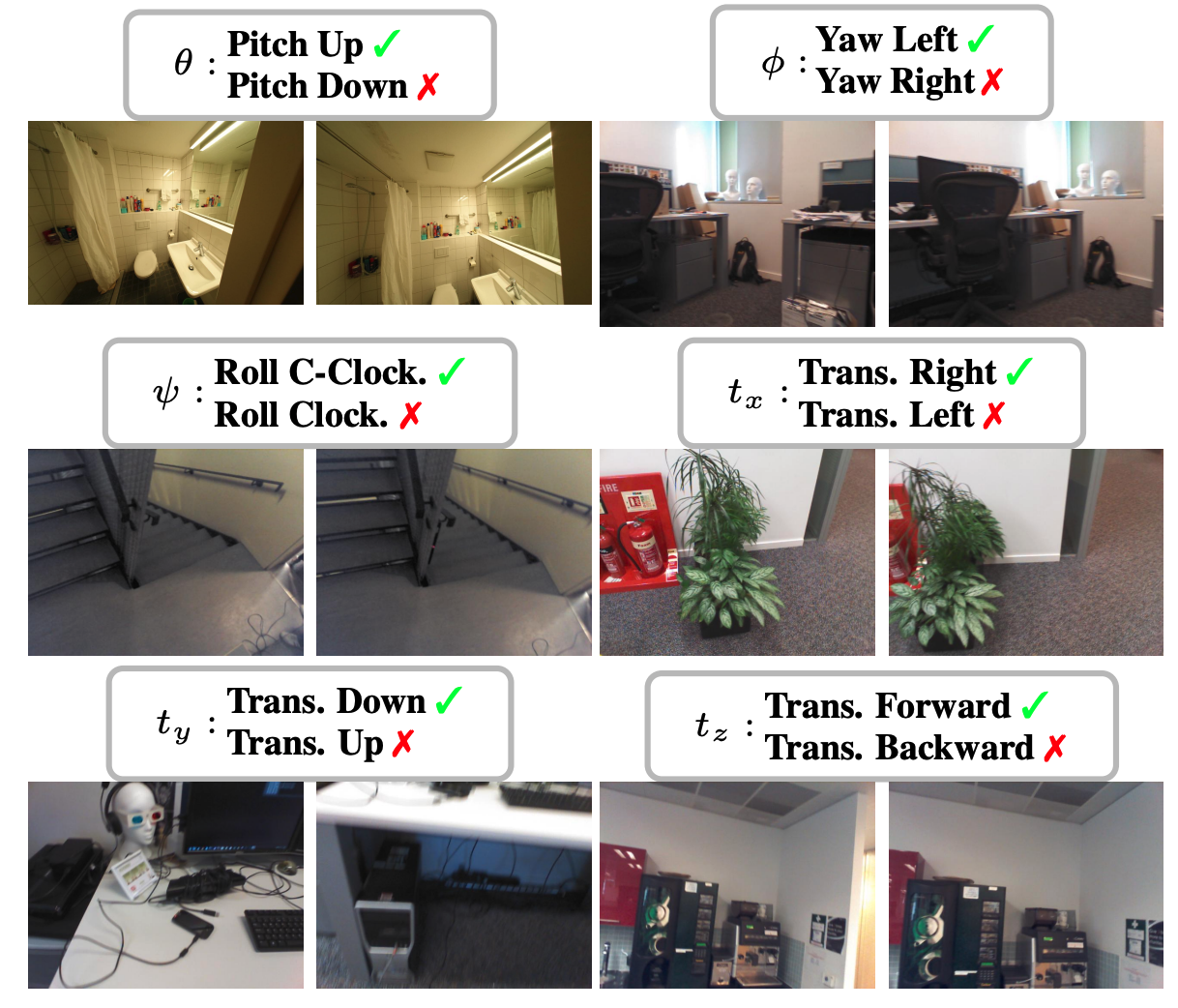

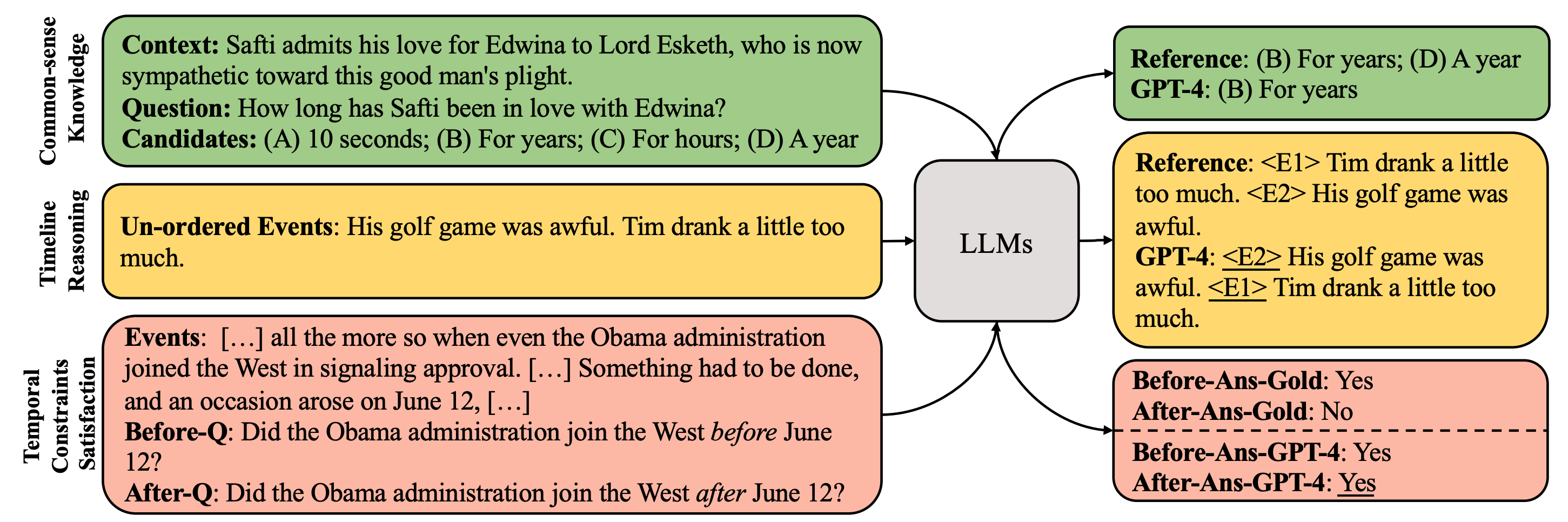

Language grounding — examining how abstract linguistic representations connect to physical and perceptual reality (e.g., spatial/temporal system) in LFM. (⏳ TemporalGrounding, SpatialGrounding)

-

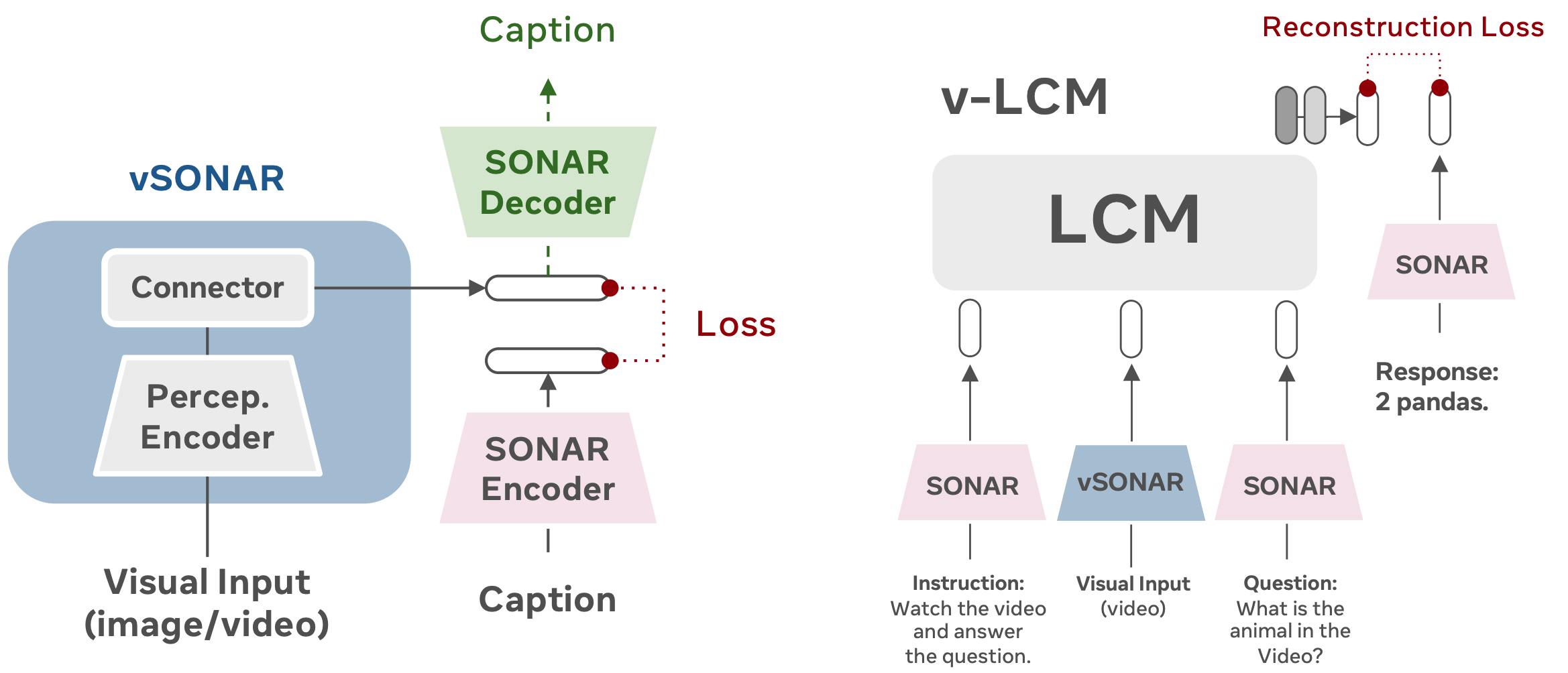

Next-generation model architecture — advancing unified architecture for the omnimodal FM with any-to-any modality, serving as the substrate for emergent latent world models (💭 vLCM).

-

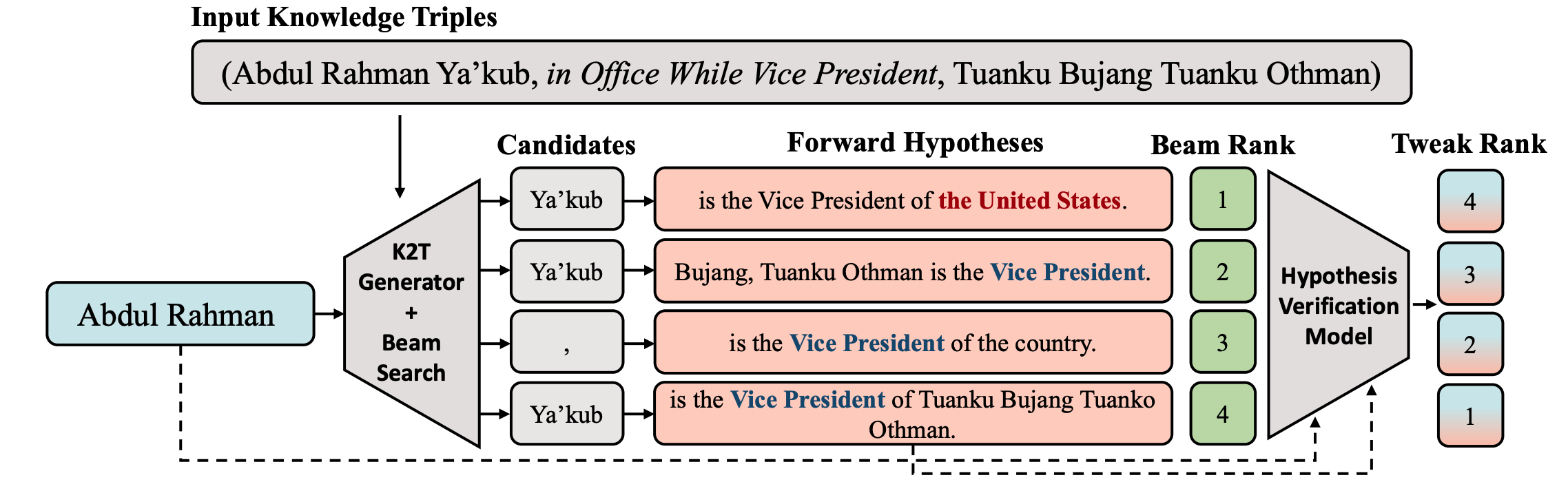

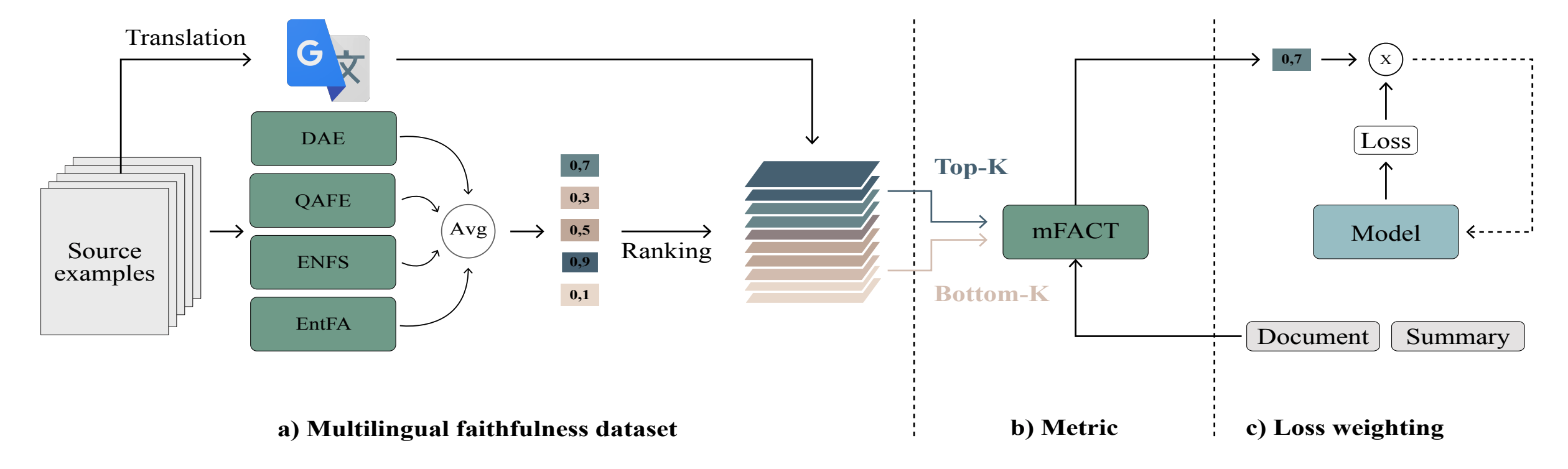

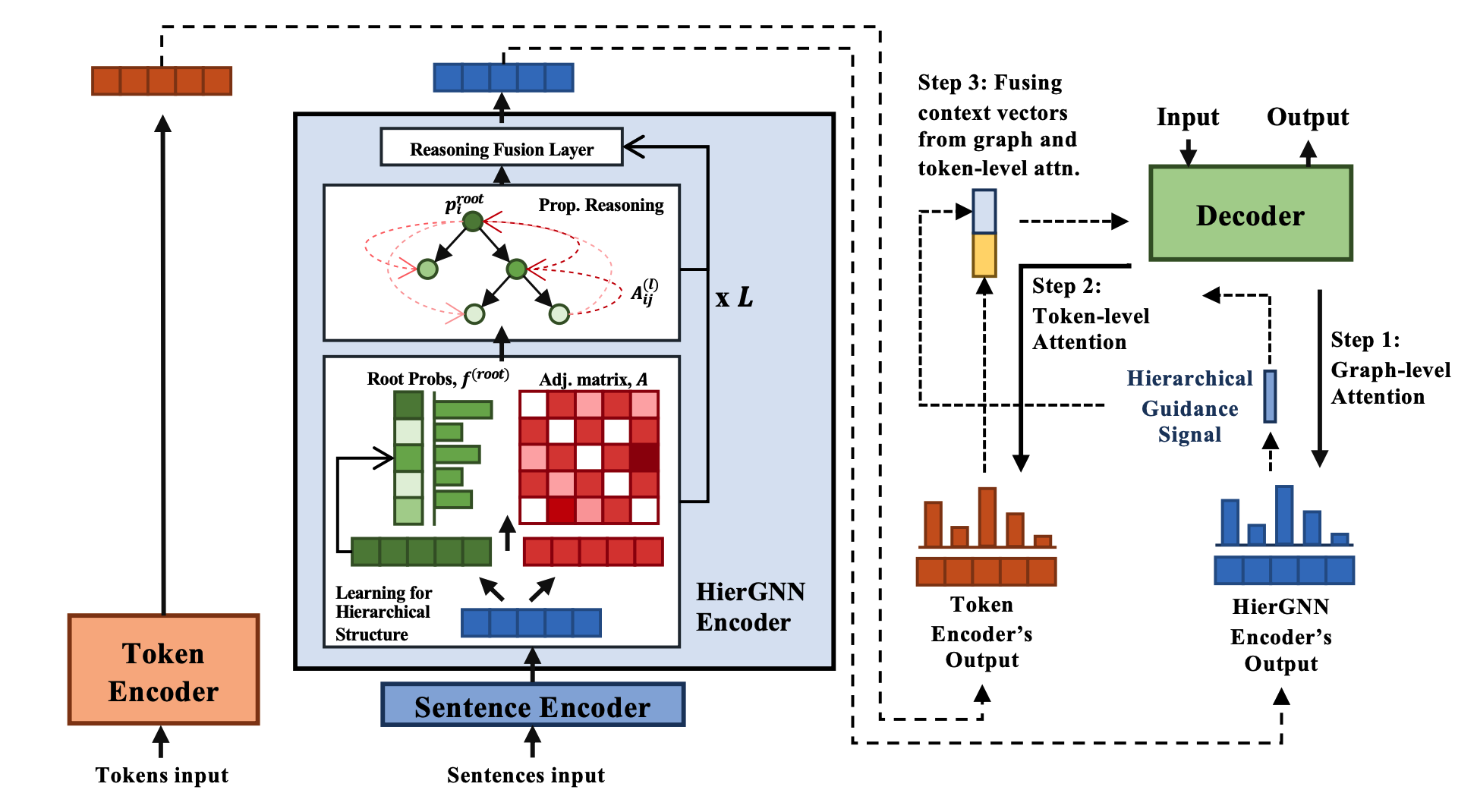

Hallucination mitigation — designing interventions and evaluation frameworks to diagnose and reduce hallucinations in LFM. (🍄 SEA, mFACT, ICR2, TWEAK)

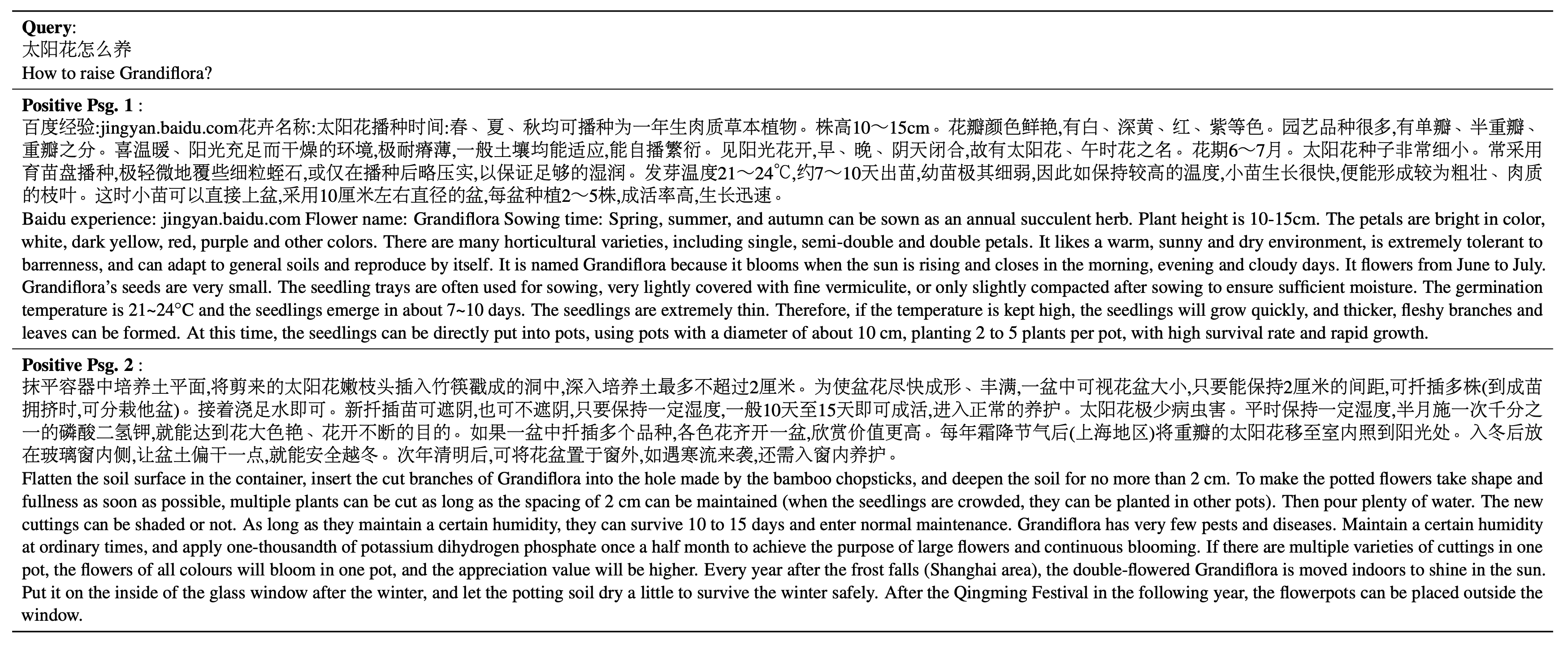

I have multiple first-author publications at leading AI venues, including ICLR, NeurIPS, ACL, EMNLP, and NAACL. My research is complemented by industry collaborations with Google DeepMind, Meta FAIR, Apple AIML, NVIDIA Research, and Baidu NLP, spanning both fundamental and applied foundation model research.

I am on the 2026 job market for industrial research scientist positions. I am actively looking for positions based in 🇨🇳/🇬🇧/🇨🇭/🇺🇸/🇪🇺/🇸🇬.

News

- Feb 2026 My paper on omnimodal foundation models is accepted to ICLR 2026! 🎉 See you in Cidade Maravilhosa 🇧🇷

- Dec 2025 The Bootstrapping World Model paper is accepted to the NeurIPS 2025 LAW Workshop! 🎉 See you in San Diego 🇺🇸

- Dec 2025 One paper about Safety Editing for LLMs is accepted to EMNLP 2025! 🎉 See you in Suzhou 🇨🇳

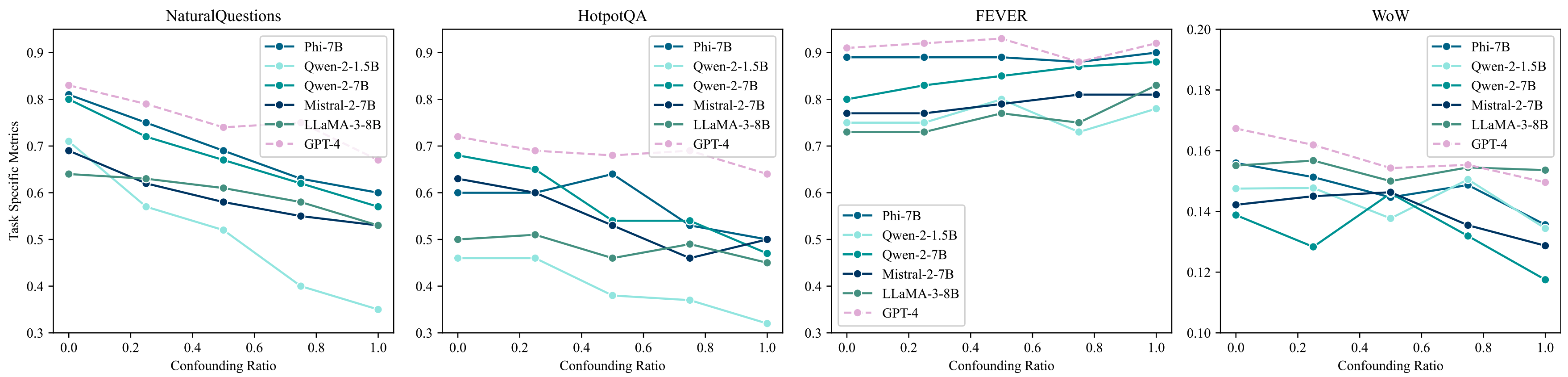

- Jul 2025 My paper on In-context Retrieval and Reasoning for LLMs is accepted to ACL 2025! 🎉 See you in Vienna 🇦🇹

- Jun 2025 I will be interning at Meta FAIR in Paris 🇫🇷 this summer! Bonjour 🥐

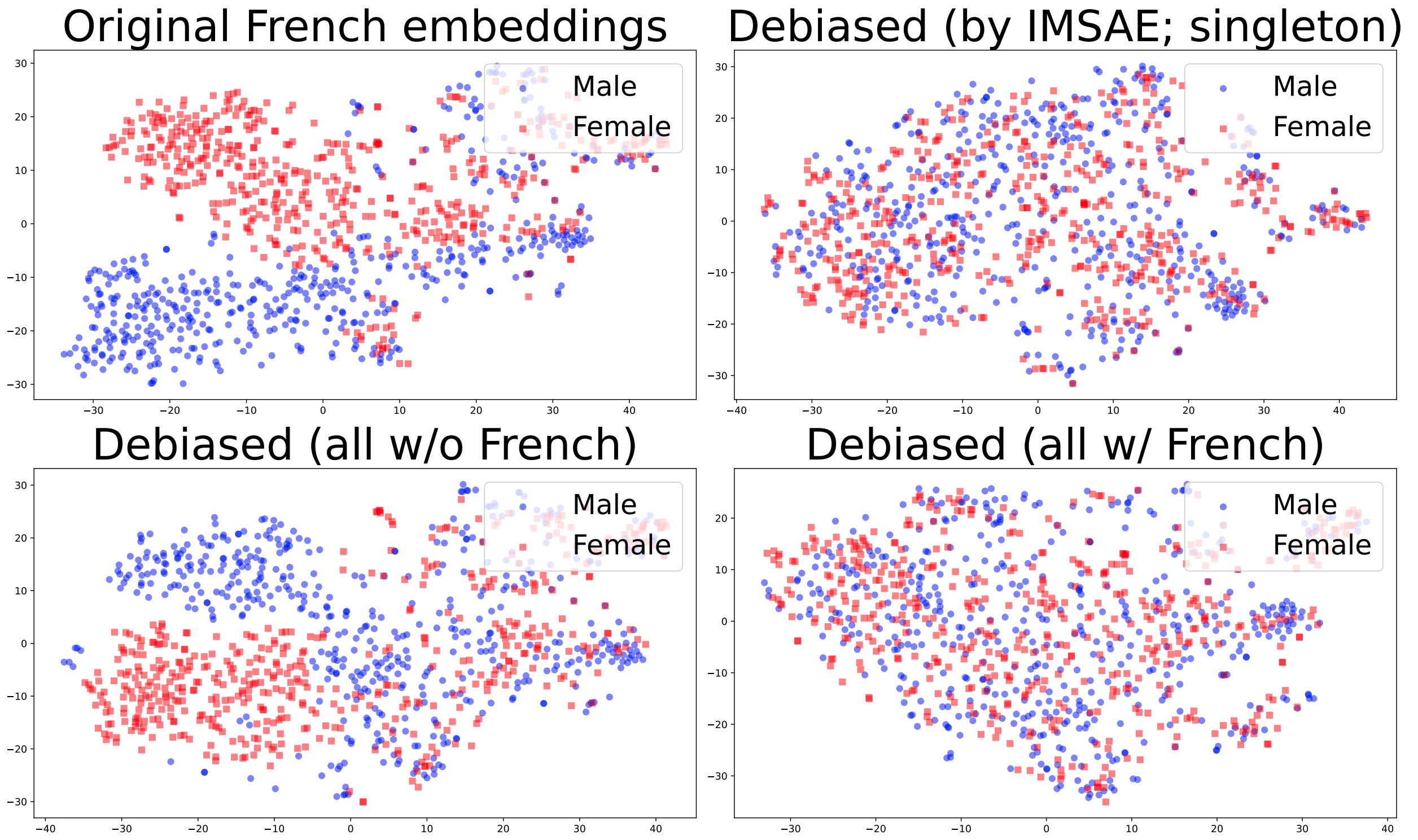

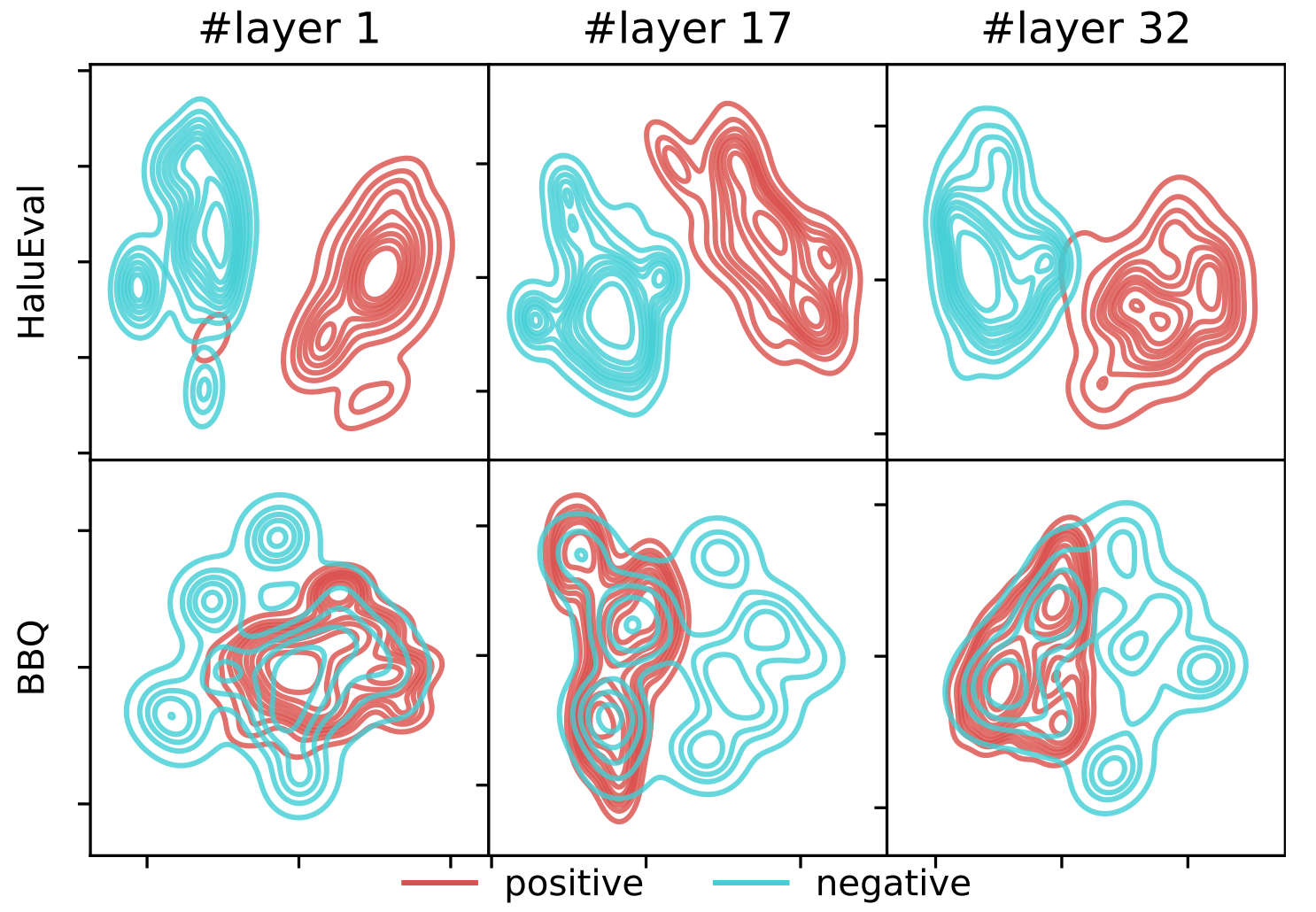

- Dec 2024 One paper on Spectral Editing of LLM Activations is accepted to NeurIPS 2024! 🎉 See you in Vancouver 🇨🇦

- Jul 2024 Two papers on Language Grounding and Hallucination are accepted to NAACL 2024! See you in Mexico 🇲🇽

- Jun 2024 I will be back at Apple AIML & MLR in Seattle 🇺🇸 this summer! Already miss Mt Rainier 🏔️🍒

- Dec 2023 My paper on Mitigating Hallucinations is accepted to EMNLP 2023! 🎉 See you in Singapore 🇸🇬

- Jun 2023 I will be interning at Apple AIML in Seattle 🇺🇸 this summer! Hello ☕

- Feb 2023 I was awarded the Apple AIML PhD Fellowship for 2023!

- Dec 2022 Two papers are accepted to EMNLP 2022! 🎉 See you in Abu Dhabi 🇦🇪

- Nov 2021 I will be interning at Baidu (NLP Department) in Beijing 🇨🇳 this winter! 你好 🐼

Professional Experience

Education

- PhD in Natural Language Processing, 2022-2026

- MSc in Cognitive Science, 2020-2021

- BSc in Computer Science, 2016-2020

Selected Publications